
2500 TPS : Hyperledger Fabric v2.5 Performance Optimization Using Caliper and Tape

Hyperledger Fabric v2.5 Performance Optimization Using Caliper and Tape: How I achieved 2500 TPS

Optimize, Benchmark, Succeed: Elevate Your Hyperledger Fabric Performance

Optimizing the performance of a Hyperledger Fabric network requires careful consideration of various components, configurations, and workflows. Here are the key considerations to enhance performance:

Hardware Considerations

- Persistent Storage: Utilize the fastest disk storage available, as Hyperledger Fabric performs extensive disk I/O. For network-attached storage, opt for the highest IOPS possible.

- Network Connectivity: Ensure high-speed connectivity (minimum 1 Gbps) between nodes, as the network is typically distributed across multiple locations.

- CPU and Memory: Allocate sufficient CPU and memory resources to peer and ordering service nodes. Monitoring resource usage is crucial; it’s advisable to maintain usage below 70%-80% of capacity to avoid performance degradation.
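The 70%-80% guideline can be turned into a simple check over peak readings from a load test. The sketch below assumes per-node peak CPU and memory utilization is already collected, for example from your monitoring stack. The node names, the sample values, and the 0.75 threshold are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold chosen from the 70%-80% range recommended above.
HEADROOM_THRESHOLD = 0.75


@dataclass
class NodeSample:
    """Peak utilization of one node during a load test, as fractions 0.0-1.0."""
    name: str
    cpu: float
    memory: float


def overloaded(samples: list[NodeSample], threshold: float = HEADROOM_THRESHOLD) -> list[str]:
    """Return the names of nodes whose peak CPU or memory use exceeds the threshold."""
    return [s.name for s in samples if s.cpu > threshold or s.memory > threshold]


if __name__ == "__main__":
    # Hypothetical peak readings from one benchmark run.
    samples = [
        NodeSample("peer0.org1", cpu=0.82, memory=0.55),
        NodeSample("peer0.org2", cpu=0.64, memory=0.60),
        NodeSample("orderer0", cpu=0.40, memory=0.78),
    ]
    print(overloaded(samples))  # ['peer0.org1', 'orderer0']
```

A node that shows up in this list is a candidate for more CPU or memory before you raise the offered load any further.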

Peer Considerations

- Number of Peers: Increasing the number of peers can enhance network performance by balancing transaction endorsement requests across peers.

- Channels per Peer: Each peer can handle multiple channels, but ensure there is a CPU core available for each channel under maximum load to optimize performance.

- Concurrency Limits: Configure concurrency limits under `peer.limits.concurrency` in the peer's core.yaml so that excessive requests cannot overwhelm the peer and hurt throughput. The Peer Gateway Service, first released in Hyperledger Fabric v2.4, added a `gatewayService` limit with a default of 500. This default can cap network TPS, so you may need to raise it to allow more concurrent requests. The relevant core.yaml section, with its default values:

peer:
  # Limits is used to configure some internal resource limits.
  limits:
    # Concurrency limits the number of concurrently running requests to a service on each peer.
    # Currently this option is only applied to endorser service and deliver service.
    # When the property is missing or the value is 0, the concurrency limit is disabled for the service.
    concurrency:
      # endorserService limits concurrent requests to endorser service that handles chaincode deployment, query and invocation,
      # including both user chaincodes and system chaincodes.
      endorserService: 2500
      # deliverService limits concurrent event listeners registered to deliver service for blocks and transaction events.
      deliverService: 2500
      # gatewayService limits concurrent requests to gateway service that handles the submission and evaluation of transactions.
      gatewayService: 500
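Little's law gives a rough way to see why a limit of 500 can cap TPS. The average number of in-flight requests equals the arrival rate multiplied by the average time each request holds a slot. This is a general queueing approximation applied here as an assumption, not something Fabric computes. The 0.5 s latency below is hypothetical, so measure your own.

```python
import math


def required_concurrency(target_tps: float, avg_latency_s: float) -> int:
    """Little's law: in-flight requests L = arrival rate (lambda) x time in system (W).

    Returns how many concurrent requests a service must admit to sustain
    target_tps when each request holds a slot for avg_latency_s on average.
    """
    return math.ceil(target_tps * avg_latency_s)


def max_tps(concurrency_limit: int, avg_latency_s: float) -> float:
    """Upper bound on throughput when at most concurrency_limit requests can be in flight."""
    return concurrency_limit / avg_latency_s


if __name__ == "__main__":
    # Hypothetical average time a request occupies a gateway slot.
    latency = 0.5
    print(required_concurrency(2500, latency))  # 1250 slots needed for 2500 TPS
    print(max_tps(500, latency))                # 1000.0 TPS ceiling with a limit of 500
```

Under these assumptions, the default `gatewayService: 500` would cap throughput well below 2500 TPS unless requests complete much faster. That is why raising the limit can matter at high load.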

- CouchDB Cache Settings: If you use CouchDB as the state database, consider increasing `ledger.state.couchDBConfig.cacheSize` in core.yaml. Frequently accessed keys are then served from the peer's in-memory cache instead of requiring CouchDB lookups.

ledger:
  state:
    couchDBConfig:
      # CacheSize denotes the maximum mega bytes (MB) to be allocated for the in-memory state
      # cache. Note that CacheSize needs to be a multiple of 32 MB. If it is not a multiple
      # of 32 MB, the peer would round the size to the next multiple of 32 MB.
      # To disable the cache, 0 MB needs to be assigned to the cacheSize.
      cacheSize: 64
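Before you edit core.yaml, the rounding rule in the comments above can be used to sanity-check a `cacheSize` value. This Python illustration mirrors the rule as documented. It is not the peer's actual code.

```python
CACHE_UNIT_MB = 32  # per the core.yaml comment, the cache is sized in 32 MB multiples


def effective_cache_size_mb(configured_mb: int) -> int:
    """Apply the documented rule: 0 disables the cache, anything else rounds up to 32 MB."""
    if configured_mb < 0:
        raise ValueError("cacheSize cannot be negative")
    if configured_mb == 0:
        return 0  # cache disabled
    # Ceiling division, then scale back up to the 32 MB unit.
    return -(-configured_mb // CACHE_UNIT_MB) * CACHE_UNIT_MB


if __name__ == "__main__":
    for size in (0, 64, 100, 256):
        print(size, "->", effective_cache_size_mb(size))
```

For example, a configured value of 100 would effectively become 128 MB, so it is simpler to pick a multiple of 32 directly.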

Orderer Considerations

- Number of Orderers: The performance is influenced by the number of ordering service nodes participating in consensus. A good practice is to start with five nodes to ensure fault tolerance without creating bottlenecks.

- Batch Configuration: Adjust `BatchTimeout` and the `BatchSize` parameters (`MaxMessageCount`, `AbsoluteMaxBytes`, and `PreferredMaxBytes`) in the channel configuration (configtx.yaml) to tune block cutting and transaction throughput.

- SendBufferSize: Increase `General.Cluster.SendBufferSize` in orderer.yaml, the maximum number of messages in the egress buffer between ordering nodes, to improve throughput.

Orderer: &OrdererDefaults
  # Batch Timeout: The amount of time to wait before creating a batch.
  BatchTimeout: 2s
  # Batch Size: Controls the number of messages batched into a block.
  # The orderer views messages opaquely, but typically, messages may
  # be considered to be Fabric transactions. The 'batch' is the group
  # of messages in the 'data' field of the block. Blocks will be a few KB
  # larger than the batch size, when signatures, hashes, and other metadata
  # is applied.
  BatchSize:
    # Max Message Count: The maximum number of messages to permit in a
    # batch. No block will contain more than this number of messages.
    MaxMessageCount: 500
    # Absolute Max Bytes: The absolute maximum number of bytes allowed for
    # the serialized messages in a batch. The maximum block size is this value
    # plus the size of the associated metadata (usually a few KB depending
    # upon the size of the signing identities). Any transaction larger than
    # this value will be rejected by ordering. It is recommended not to exceed
    # 49 MB, given the default grpc max message size of 100 MB configured on
    # orderer and peer nodes (and allowing for message expansion during communication).
    AbsoluteMaxBytes: 10 MB
    # Preferred Max Bytes: The preferred maximum number of bytes allowed
    # for the serialized messages in a batch. Roughly, this field may be considered
    # the best effort maximum size of a batch. A batch will fill with messages
    # until this size is reached (or the max message count, or batch timeout is
    # exceeded). If adding a new message to the batch would cause the batch to
    # exceed the preferred max bytes, then the current batch is closed and written
    # to a block, and a new batch containing the new message is created. If a
    # message larger than the preferred max bytes is received, then its batch
    # will contain only that message. Because messages may be larger than
    # preferred max bytes (up to AbsoluteMaxBytes), some batches may exceed
    # the preferred max bytes, but will always contain exactly one transaction.
    PreferredMaxBytes: 2 MB
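To build intuition for how `MaxMessageCount`, `PreferredMaxBytes`, and `AbsoluteMaxBytes` interact, the batching rules from the comments above can be modeled in a few lines of Python. This is a simplified sketch, not the orderer's implementation. `BatchTimeout` is not simulated, "MB" is assumed to mean 1024 × 1024 bytes, and the transaction sizes are hypothetical.

```python
from dataclasses import dataclass

# Assumption: "MB" in configtx.yaml is treated as 1024 * 1024 bytes.
MB = 1024 * 1024


@dataclass
class BatchSize:
    """BatchSize values from the configtx.yaml excerpt (sizes in bytes)."""
    max_message_count: int = 500
    absolute_max_bytes: int = 10 * MB
    preferred_max_bytes: int = 2 * MB


def cut_batches(message_sizes: list[int], cfg: BatchSize) -> list[list[int]]:
    """Group transaction sizes (bytes) into batches following the configtx.yaml rules.

    Simplified model: BatchTimeout is not simulated; whatever is still pending at
    the end is flushed as a final batch, standing in for the timeout firing.
    """
    batches: list[list[int]] = []
    pending: list[int] = []
    pending_bytes = 0

    for size in message_sizes:
        if size > cfg.absolute_max_bytes:
            continue  # rejected by ordering; never enters a batch
        if size > cfg.preferred_max_bytes:
            # Oversized message: close the pending batch, then give it a batch of its own.
            if pending:
                batches.append(pending)
                pending, pending_bytes = [], 0
            batches.append([size])
            continue
        if pending_bytes + size > cfg.preferred_max_bytes:
            # Adding this message would exceed PreferredMaxBytes: close the current batch first.
            batches.append(pending)
            pending, pending_bytes = [], 0
        pending.append(size)
        pending_bytes += size
        if len(pending) >= cfg.max_message_count:
            # MaxMessageCount reached: cut immediately.
            batches.append(pending)
            pending, pending_bytes = [], 0

    if pending:
        batches.append(pending)
    return batches


if __name__ == "__main__":
    cfg = BatchSize()
    # 1,200 hypothetical 3 KB transactions: MaxMessageCount is reached before 2 MB.
    print([len(b) for b in cut_batches([3 * 1024] * 1200, cfg)])  # [500, 500, 200]
    # 1,000 hypothetical 5 KB transactions: PreferredMaxBytes is reached first.
    print([len(b) for b in cut_batches([5 * 1024] * 1000, cfg)])  # [409, 409, 182]
```

As a rough consequence, sustaining 2500 TPS with `MaxMessageCount: 500` means cutting about 2500 / 500 = 5 blocks per second. This assumes transactions are small enough that `PreferredMaxBytes` is not hit first. At that load, blocks fill on count long before the 2s `BatchTimeout` expires, so the timeout mainly matters when load is light.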

Application Considerations

- Database Choice: CouchDB is slower than LevelDB, so for high-throughput applications prefer LevelDB unless you need CouchDB's JSON rich queries. Consider serving queries that do not require real-time ledger access from an off-chain store.

- Chaincode Optimization: Design chaincode queries to limit data return sizes and ensure they are indexed if using JSON queries. This helps avoid timeouts and improves transaction efficiency.

- Peer Gateway Service: Utilize the Peer Gateway Service introduced in v2.4 for improved throughput and reduced complexity in client applications.

- Payload Size Management: Keep payload sizes small by storing large data off-chain and only storing hashes on-chain to prevent performance issues associated with large transactions.

- Chaincode Language Performance: Go chaincode generally offers better performance than Node or Java chaincode, making it preferable for high-throughput applications.
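The payload-size advice (keep the data off-chain, put only its hash on-chain) can be sketched in plain Python. The two dictionaries are hypothetical stand-ins. One represents an off-chain store such as object storage or a database. The other represents the ledger's world state. In real Go chaincode, the on-chain write would be a `PutState` call. The helper names `put_document` and `verify_document` are also hypothetical.

```python
import hashlib
import json

# Hypothetical stand-ins for an off-chain store and the ledger world state.
off_chain_store: dict[str, bytes] = {}
world_state: dict[str, str] = {}


def put_document(doc_id: str, payload: bytes) -> str:
    """Store the full payload off-chain and only its SHA-256 digest on-chain."""
    digest = hashlib.sha256(payload).hexdigest()
    off_chain_store[doc_id] = payload
    world_state[doc_id] = json.dumps({"sha256": digest})  # small on-chain value
    return digest


def verify_document(doc_id: str) -> bool:
    """Recompute the off-chain copy's digest and compare it with the on-chain record."""
    record = json.loads(world_state[doc_id])
    return hashlib.sha256(off_chain_store[doc_id]).hexdigest() == record["sha256"]


if __name__ == "__main__":
    put_document("invoice-001", b"example large payload " * 1000)
    print(verify_document("invoice-001"))  # True
    off_chain_store["invoice-001"] = b"tampered"
    print(verify_document("invoice-001"))  # False
```

However large the document is, only a 64-character hex digest travels through endorsement, ordering, and validation. Integrity can still be checked later against the off-chain copy.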

By considering these factors, organizations can effectively optimize their Hyperledger Fabric networks for better performance, scalability, and reliability. Continuous monitoring and adjustments based on usage patterns will further enhance overall efficiency and user experience in blockchain applications.

I have created a dedicated course on optimizing a Hyperledger Fabric network for higher TPS.

This course is designed to provide a comprehensive understanding of Caliper/Tape integration with Hyperledger Fabric v2.5.

- Module 1: Introduction to Hyperledger Fabric v2.5 and Performance Optimization

- Module 2: Fabric Network Creation and Configuration

- Module 3: Caliper Configuration and Integration

- Module 4: Tape Configuration and Integration

- Module 5: Performance Optimization Techniques

I tested two different resource configurations and two network topologies:

Resource Type

- MacBook M3 Pro, 16 GB RAM, 10 cores

- AWS VM: c5.9xlarge, 36 vCPUs (18 cores), 72 GiB RAM, 12 Gbps network performance, EBS storage (100 GB)

Network Topology Type

- Three-org setup: 3 peers, 3 CouchDB instances, 6 chaincode containers (two chaincodes, Node and Go), and 3 orderers

- Two-org setup: 2 peers, 4 chaincode containers (Node and Go chaincode), and 1 orderer

Benchmarking tools

- Caliper

- Tape
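When comparing runs across these setups, it helps to be explicit about what "TPS" means. The sketch below computes send rate, throughput, and latency from per-transaction submit and commit timestamps. These are roughly the kinds of figures Caliper and Tape report. The exact formulas those tools use may differ, so treat this as an approximation. The `TxRecord` type and `summarize` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TxRecord:
    submit_s: float            # time the client submitted the transaction (seconds)
    commit_s: Optional[float]  # time the commit event arrived; None if it failed


def summarize(records: list[TxRecord]) -> dict[str, float]:
    """Approximate send rate, throughput, and latency for one benchmark round."""
    if not records:
        raise ValueError("no transactions recorded")
    committed = [r for r in records if r.commit_s is not None]
    first_submit = min(r.submit_s for r in records)
    last_submit = max(r.submit_s for r in records)
    last_commit = max((r.commit_s for r in committed), default=last_submit)
    latencies = [r.commit_s - r.submit_s for r in committed]
    # Guard against zero-length windows in tiny runs.
    send_window = max(last_submit - first_submit, 1e-9)
    total_window = max(last_commit - first_submit, 1e-9)
    return {
        "succ": len(committed),
        "fail": len(records) - len(committed),
        "send_rate_tps": len(records) / send_window,
        "throughput_tps": len(committed) / total_window,
        "avg_latency_s": sum(latencies) / len(latencies) if latencies else 0.0,
        "max_latency_s": max(latencies, default=0.0),
    }


if __name__ == "__main__":
    # Four hypothetical transactions; the third one failed.
    recs = [TxRecord(0.0, 0.5), TxRecord(0.5, 1.0), TxRecord(1.0, None), TxRecord(1.5, 2.0)]
    print(summarize(recs)["throughput_tps"])  # 1.5
```

Throughput here counts only committed transactions over the window from the first submission to the last commit. That is why it can be lower than the send rate when transactions fail or commits lag.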


Here are the observations

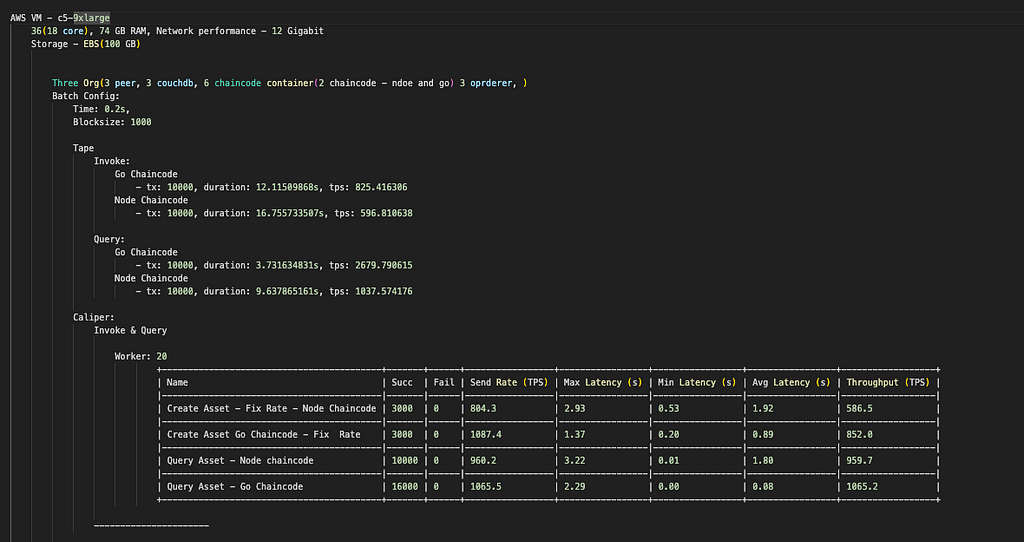

- AWS VM: c5.9xlarge, 36 vCPUs (18 cores), 72 GiB RAM, 12 Gbps network performance, EBS storage (100 GB)

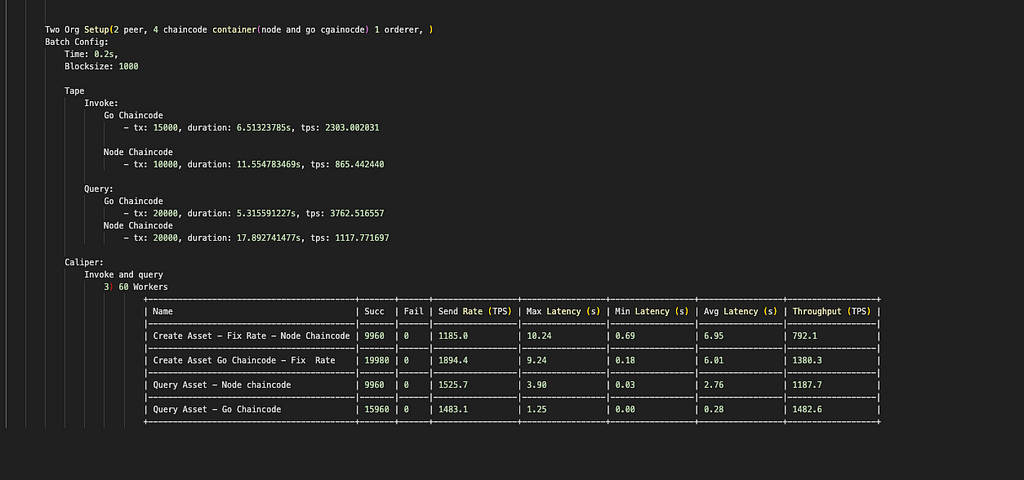

- AWS VM: c5.9xlarge, 36 vCPUs (18 cores), 72 GiB RAM, 12 Gbps network performance, EBS storage (100 GB)

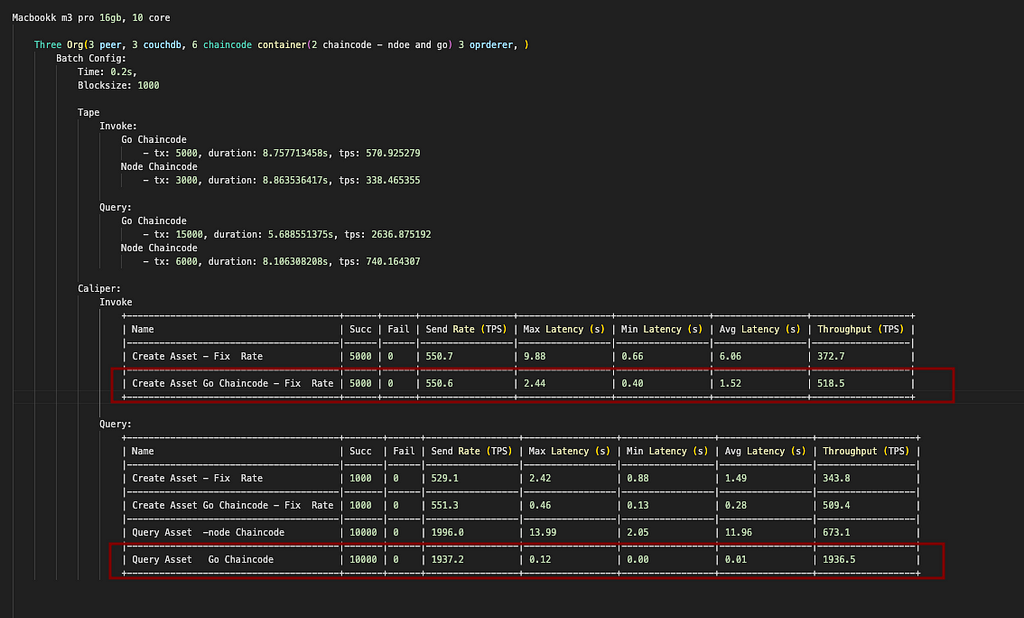

- MacBook M3 Pro, 16 GB RAM, 10 cores

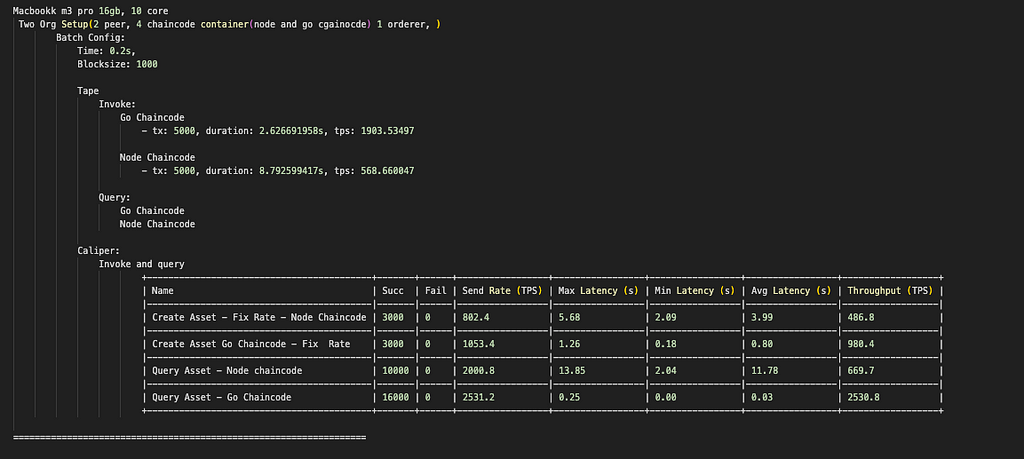

- MacBook M3 Pro, 16 GB RAM, 10 cores

If you have any questions or issues, please contact me on Instagram or by email:

Instagram: https://www.instagram.com/pavanadhavofficial/

email: adhavpavan@gmail.com

2500 TPS : Hyperledger Fabric v2.5 Performance Optimization Using Caliper and Tape was originally published in Coinmonks on Medium.
